The goal of this vignette is to familiarize you with the basics of sfdep:

- creating neighbors

- creating weights

- calculating a LISA

Intro / what is spatial relationship

sfdep provides users with a way to conduct “Exploratory Spatial Data Analysis”, or ESDA for short. ESDA differs from typical exploratory data analysis in that we are strictly exploring spatial relationships. As you might have guessed, ESDA evaluates whether the phenomena captured in your data are dependent upon space–or are spatially auto-correlated. Much of ESDA is focused on “Local Indicators of Spatial Association”, LISAs for short. LISAs are measures that are developed to identify whether some observed pattern is truly random or impacted by its relationship in space.

Much of the philosophy of LISAs and ESDA are captured in Tobler’s First Law of Geography

“Everything is related to everything else. But near things are more related than distant things.” - Waldo R. Tobler, 1969

It’s tough to state this any more simply. Things that are next to each other tend to be more similar than things that are further away.

To assess whether near things are related and further things less so, we typically lattice data. A lattice is created when a landscape or region is divided into sub-areas. Most naturally, these types of data are represented as vector polygons.

Neighbors

To describe neighbors I’m going to steal extensively from my own post “Understanding Spatial Autocorrelation”.

If we assume that there is a spatial relationship in our data, we are taking on the belief that our data are not completely independent of each other. If nearer things are more related, then census tracts that are close to each other will have similar values.

In order to evaluate whether nearer things are related, we must know what observations are nearby. With polygon data we identify neighbors based on their contiguity. To be contiguous means to be connected or touching—think of the contiguous lower 48 states.

Contiguities

The two most common contiguities are based on the game of chess. Let’s take a simple chess board.

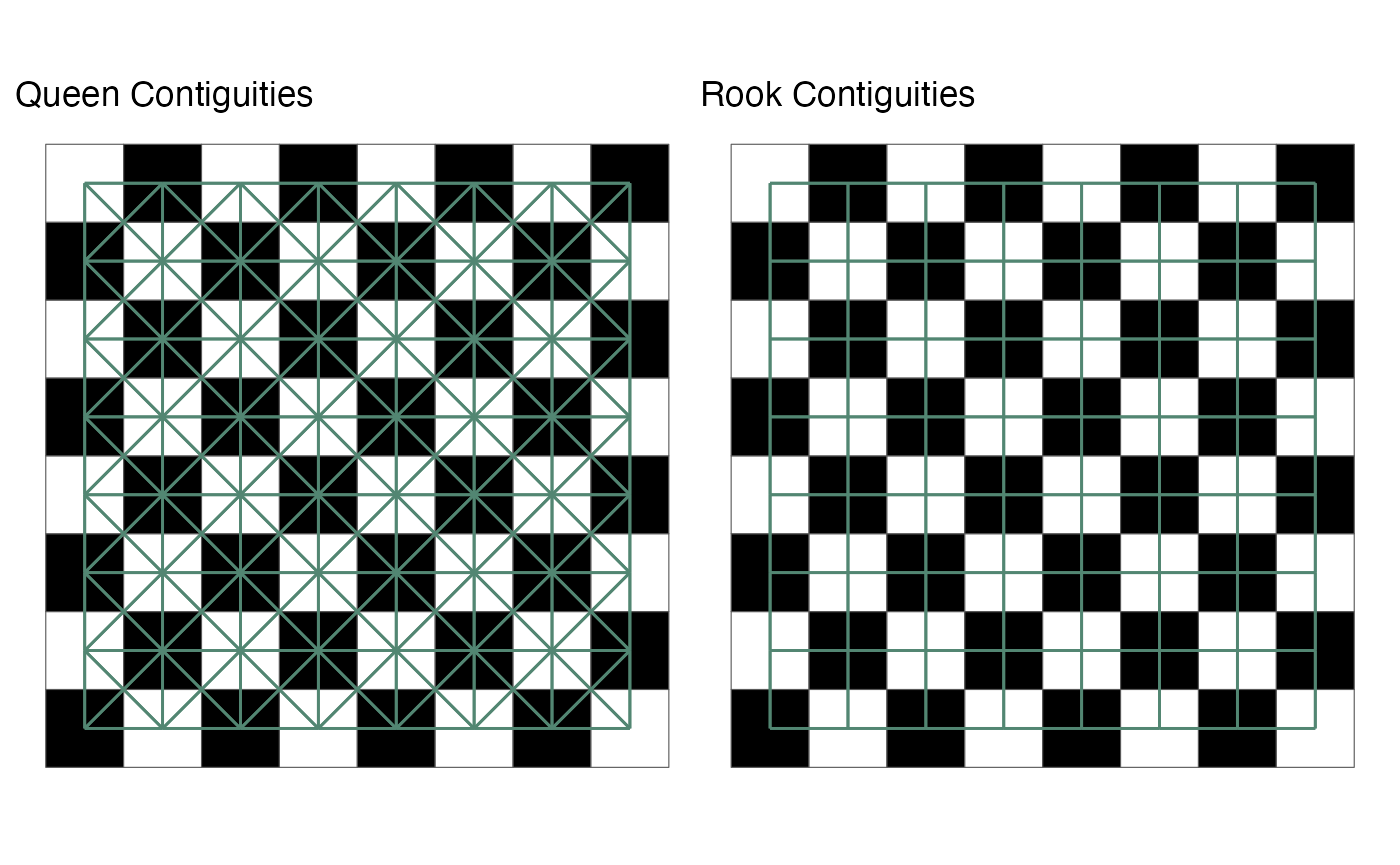

In chess each piece can move in a different way. All pieces, with the exception of the knight, move either diagonally or horizontally and vertically. The most common contiguities are queen and rook contiguities. In chess, a queen can move diagonally and horizontal and vertically whereas a rook can only move horizontal and vertically.

We extend this idea to polygons. Queen contiguities identify neighbors based on any polygon that is touching. With rook contiguities, we identify neighbors based on polygons that touch on the side. For most social science research, we only need to be concerned with queen contiguities.

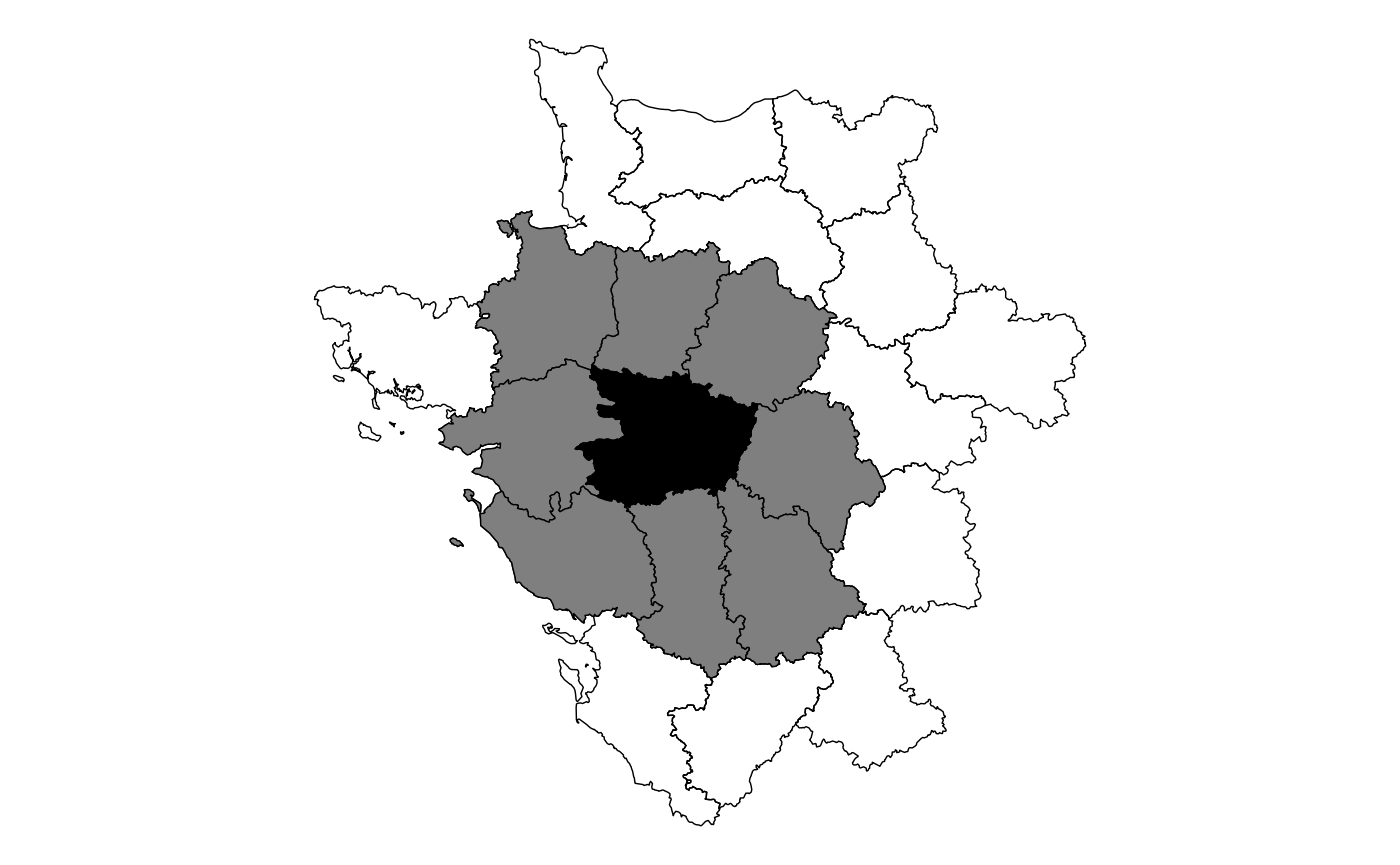

While a chess board might make intuitive sense, geographies are

really wonky in real life. Below is map of the 47th observation in the

guerry object and it’s queen contiguity neighbors.

You can see that any polygon that is touching, even at a corner, will be considered a neighbor to the point in question. This will be done for every polygon in our data set.

Understanding the spatial weights

Once neighbors are identified, they can then be used to calculate

spatial weights. The typical method of calculating the

spatial weights is through row standardization

(st_weights(nb, style = "W")). Each neighbor that touches

our census tract will be assigned an equal weight. We do this by

assigning each neighbor a value of 1 then dividing by the number of

neighbors. If we have 5 neighboring census tracts, each of them will

have a spatial weight of 0.2 (1 / 5 = 0.2).

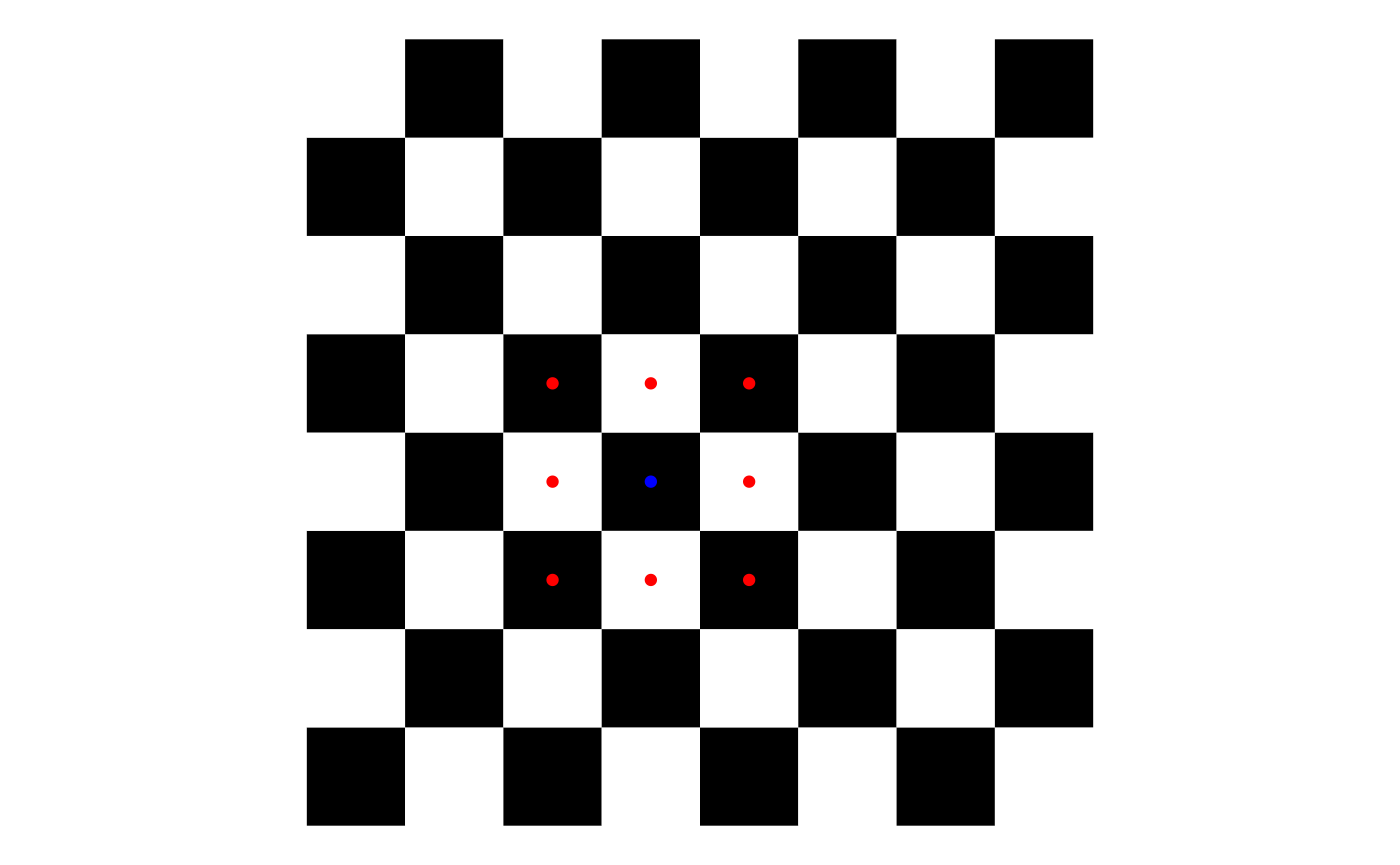

Going back to the chess board example, we can take the position d4 and look at the queen contiguities. There are 8 squares that immediately touch the square. Each one of these squares is considered a neighbor and given a value of 1. Then each square is divided by the total number or neighbors, 8.

Very simply it looks like the following

Very simply it looks like the following

Creating Neighbors and Weights

sfdep utilizes list objects for both neighbors and weights. The neighbors and weights lists.

To identify contiguity-based neighbors, we use

st_contiguity() on the sf geometry column. And to calculate

the weights from the neighbors list, we use st_weights() on

the resultant neighbors list. By convention these are typically called

nb and wt.

These lists can be created line by line or within a pipe. The most common usecase is likely via a dplyr pipeline.

guerry_nb <- guerry %>%

mutate(nb = st_contiguity(geometry),

wt = st_weights(nb),

.before = 1) # to put them in the front

guerry_nb

#> Simple feature collection with 85 features and 28 fields

#> Geometry type: MULTIPOLYGON

#> Dimension: XY

#> Bounding box: xmin: 47680 ymin: 1703258 xmax: 1031401 ymax: 2677441

#> CRS: NA

#> # A tibble: 85 × 29

#> nb wt code_dept count ave_id_geo dept region department crime_pers

#> * <nb> <lis> <fct> <dbl> <dbl> <int> <fct> <fct> <int>

#> 1 <int [4]> <dbl> 01 1 49 1 E Ain 28870

#> 2 <int [6]> <dbl> 02 1 812 2 N Aisne 26226

#> 3 <int [6]> <dbl> 03 1 1418 3 C Allier 26747

#> 4 <int [4]> <dbl> 04 1 1603 4 E Basses-Al… 12935

#> 5 <int [3]> <dbl> 05 1 1802 5 E Hautes-Al… 17488

#> 6 <int [7]> <dbl> 07 1 2249 7 S Ardeche 9474

#> 7 <int [3]> <dbl> 08 1 35395 8 N Ardennes 35203

#> 8 <int [3]> <dbl> 09 1 2526 9 S Ariege 6173

#> 9 <int [5]> <dbl> 10 1 34410 10 E Aube 19602

#> 10 <int [5]> <dbl> 11 1 2807 11 S Aude 15647

#> # ℹ 75 more rows

#> # ℹ 20 more variables: crime_prop <int>, literacy <int>, donations <int>,

#> # infants <int>, suicides <int>, main_city <ord>, wealth <int>,

#> # commerce <int>, clergy <int>, crime_parents <int>, infanticide <int>,

#> # donation_clergy <int>, lottery <int>, desertion <int>, instruction <int>,

#> # prostitutes <int>, distance <dbl>, area <int>, pop1831 <dbl>,

#> # geometry <MULTIPOLYGON>Calculating LISAs

To calculate LISAs we typically will provide a numeric object(s), a

neighbor list, and a weights list–and often the argument

nsim to determine the number of simulations to run. Most

LISAs return a data frame of the same number of rows as the input

dataframe. The resultant data frame can be unnested, or columns hoisted

for ease of analysis.

For example to calculate the Local Moran we use the function

local_moran()

lisa <- guerry_nb %>%

mutate(local_moran = local_moran(crime_pers, nb, wt, nsim = 199),

.before = 1)

lisa

#> Simple feature collection with 85 features and 29 fields

#> Geometry type: MULTIPOLYGON

#> Dimension: XY

#> Bounding box: xmin: 47680 ymin: 1703258 xmax: 1031401 ymax: 2677441

#> CRS: NA

#> # A tibble: 85 × 30

#> local_moran$ii nb wt code_dept count ave_id_geo dept region department

#> * <dbl> <nb> <lis> <fct> <dbl> <dbl> <int> <fct> <fct>

#> 1 0.522 <int> <dbl> 01 1 49 1 E Ain

#> 2 0.828 <int> <dbl> 02 1 812 2 N Aisne

#> 3 0.804 <int> <dbl> 03 1 1418 3 C Allier

#> 4 0.742 <int> <dbl> 04 1 1603 4 E Basses-Al…

#> 5 0.231 <int> <dbl> 05 1 1802 5 E Hautes-Al…

#> 6 0.839 <int> <dbl> 07 1 2249 7 S Ardeche

#> 7 0.623 <int> <dbl> 08 1 35395 8 N Ardennes

#> 8 1.65 <int> <dbl> 09 1 2526 9 S Ariege

#> 9 -0.0198 <int> <dbl> 10 1 34410 10 E Aube

#> 10 0.695 <int> <dbl> 11 1 2807 11 S Aude

#> # ℹ 75 more rows

#> # ℹ 32 more variables: local_moran$eii <dbl>, $var_ii <dbl>, $z_ii <dbl>,

#> # $p_ii <dbl>, $p_ii_sim <dbl>, $p_folded_sim <dbl>, $skewness <dbl>,

#> # $kurtosis <dbl>, $mean <fct>, $median <fct>, $pysal <fct>,

#> # crime_pers <int>, crime_prop <int>, literacy <int>, donations <int>,

#> # infants <int>, suicides <int>, main_city <ord>, wealth <int>,

#> # commerce <int>, clergy <int>, crime_parents <int>, infanticide <int>, …Now that we have a data frame, we need to unnest it.

lisa %>%

tidyr::unnest(local_moran)

#> Simple feature collection with 85 features and 40 fields

#> Geometry type: MULTIPOLYGON

#> Dimension: XY

#> Bounding box: xmin: 47680 ymin: 1703258 xmax: 1031401 ymax: 2677441

#> CRS: NA

#> # A tibble: 85 × 41

#> ii eii var_ii z_ii p_ii p_ii_sim p_folded_sim skewness

#> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

#> 1 0.522 -0.0463 0.364 0.943 0.346 0.36 0.18 0.0488

#> 2 0.828 -0.0382 0.112 2.59 0.00967 0.03 0.015 0.393

#> 3 0.804 -0.0242 0.165 2.04 0.0416 0.1 0.05 0.292

#> 4 0.742 0.0382 0.263 1.37 0.170 0.14 0.07 0.0239

#> 5 0.231 -0.00316 0.0307 1.34 0.181 0.14 0.07 -0.115

#> 6 0.839 -0.0234 0.336 1.49 0.137 0.16 0.08 -0.0348

#> 7 0.623 0.0680 1.53 0.449 0.653 0.66 0.33 -0.00121

#> 8 1.65 -0.0783 0.892 1.83 0.0678 0.07 0.035 -0.0656

#> 9 -0.0198 0.000993 0.000436 -0.993 0.321 0.32 0.16 -0.197

#> 10 0.695 0.0238 0.0859 2.29 0.0219 0.02 0.01 0.114

#> # ℹ 75 more rows

#> # ℹ 33 more variables: kurtosis <dbl>, mean <fct>, median <fct>, pysal <fct>,

#> # nb <nb>, wt <list>, code_dept <fct>, count <dbl>, ave_id_geo <dbl>,

#> # dept <int>, region <fct>, department <fct>, crime_pers <int>,

#> # crime_prop <int>, literacy <int>, donations <int>, infants <int>,

#> # suicides <int>, main_city <ord>, wealth <int>, commerce <int>,

#> # clergy <int>, crime_parents <int>, infanticide <int>, …This can then be used for visualization or further analysis.

Additionally, for other LISAs that can take any number of inputs, e.g. 3 or more numeric variables, we provide this as a list. Take for example the Local C statistic.

guerry_nb %>%

mutate(local_c = local_c_perm(list(crime_pers, wealth), nb, wt),

.before = 1) %>%

tidyr::unnest(local_c)

#> Simple feature collection with 85 features and 38 fields

#> Geometry type: MULTIPOLYGON

#> Dimension: XY

#> Bounding box: xmin: 47680 ymin: 1703258 xmax: 1031401 ymax: 2677441

#> CRS: NA

#> # A tibble: 85 × 39

#> ci cluster e_ci var_ci z_ci p_ci p_ci_sim p_folded_sim skewness

#> <dbl> <fct> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

#> 1 1.53 Positive 2.44 0.665 -1.11 0.266 0.284 0.142 0.176

#> 2 0.500 Positive 1.74 0.387 -1.99 0.0465 0.02 0.01 0.511

#> 3 0.642 Positive 1.70 0.251 -2.12 0.0341 0.024 0.012 0.105

#> 4 0.324 Positive 2.27 0.834 -2.13 0.0328 0.02 0.01 0.129

#> 5 0.298 Positive 2.33 0.971 -2.06 0.0396 0.008 0.004 0.264

#> 6 1.60 Positive 3.38 0.708 -2.11 0.0353 0.036 0.018 0.193

#> 7 2.04 Positive 3.22 1.60 -0.931 0.352 0.372 0.186 0.272

#> 8 2.20 Positive 3.40 1.74 -0.905 0.366 0.392 0.196 0.207

#> 9 0.507 Positive 1.68 0.367 -1.95 0.0518 0.04 0.02 0.196

#> 10 1.46 Positive 1.72 0.371 -0.424 0.671 0.684 0.342 0.263

#> # ℹ 75 more rows

#> # ℹ 30 more variables: kurtosis <dbl>, nb <nb>, wt <list>, code_dept <fct>,

#> # count <dbl>, ave_id_geo <dbl>, dept <int>, region <fct>, department <fct>,

#> # crime_pers <int>, crime_prop <int>, literacy <int>, donations <int>,

#> # infants <int>, suicides <int>, main_city <ord>, wealth <int>,

#> # commerce <int>, clergy <int>, crime_parents <int>, infanticide <int>,

#> # donation_clergy <int>, lottery <int>, desertion <int>, instruction <int>, …